The Visionary Glasses is a wearable device designed to assist visually impaired individuals by providing real-time environmental awareness. The glasses will combine a camera, an onboard microprocessor, and AI models to detect and analyze the surroundings and describe them to the user. By offering auditory feedback about nearby objects and their distances, the glasses will allow users to navigate their environments more independently and confidently.

By using AI to provide both spatial awareness and detailed descriptions of objects, the Visionary Glasses project aims to enhance the independence and quality of life of visually impaired users.

The Visionary Glasses is currently under development, with ongoing research and testing to refine its features and improve its usability for visually impaired individuals. The project aims to integrate current camera, AI, and text-to-speech technology while keeping the design and functionality user-friendly.

Use Case Example: When the user wears the glasses and powers them on, the Raspberry Pi camera begins streaming video of the environment. The live video is processed in real time by the AI model running on the microprocessor. Objects and their distances are identified, and verbal cues are provided through the speaker. For instance, if the user approaches a chair 2 meters ahead, the glasses will say, “Chair 2 meters ahead.”

The glasses can also identify and describe specific items, for example listing the ingredients of a food item or explaining the function of a car part. This allows the user to navigate safely and gain deeper insights into their surroundings.
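The last step of the use case, turning a detection into a spoken phrase, could be sketched as follows. This is only an illustration under stated assumptions: the `Detection` fields, the `describe` and `nearest_first` helpers, and the `center_band` threshold are hypothetical names chosen for this example, and the detector and text-to-speech engine are left out entirely.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object (hypothetical structure, not a fixed project API)."""
    label: str         # object class name from the detector, e.g. "chair"
    distance_m: float  # estimated distance to the object in meters
    x_center: float    # horizontal box center: 0.0 = left edge, 1.0 = right edge


def describe(det: Detection, center_band: float = 0.2) -> str:
    """Turn one detection into a short phrase for the text-to-speech engine."""
    # Round to one decimal and drop a trailing ".0" so 2.0 is spoken as "2".
    distance = f"{round(det.distance_m, 1):g}"
    unit = "meter" if distance == "1" else "meters"
    name = det.label.capitalize()

    # Objects inside the central band of the frame are reported as "ahead".
    offset = det.x_center - 0.5
    if abs(offset) <= center_band / 2:
        return f"{name} {distance} {unit} ahead."

    side = "left" if offset < 0 else "right"
    return f"{name} to your {side} at {distance} {unit}."


def nearest_first(detections: list[Detection]) -> list[str]:
    """Describe all detections, closest object first."""
    ordered = sorted(detections, key=lambda d: d.distance_m)
    return [describe(d) for d in ordered]


if __name__ == "__main__":
    frame = [
        Detection("chair", 2.0, 0.5),
        Detection("obstacle", 1.5, 0.1),
    ]
    for phrase in nearest_first(frame):
        print(phrase)
```

Announcing the nearest object first is a design choice made for this sketch, on the assumption that the closest obstacle matters most for safe navigation.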

Possible Key Features:

- Camera Integration:

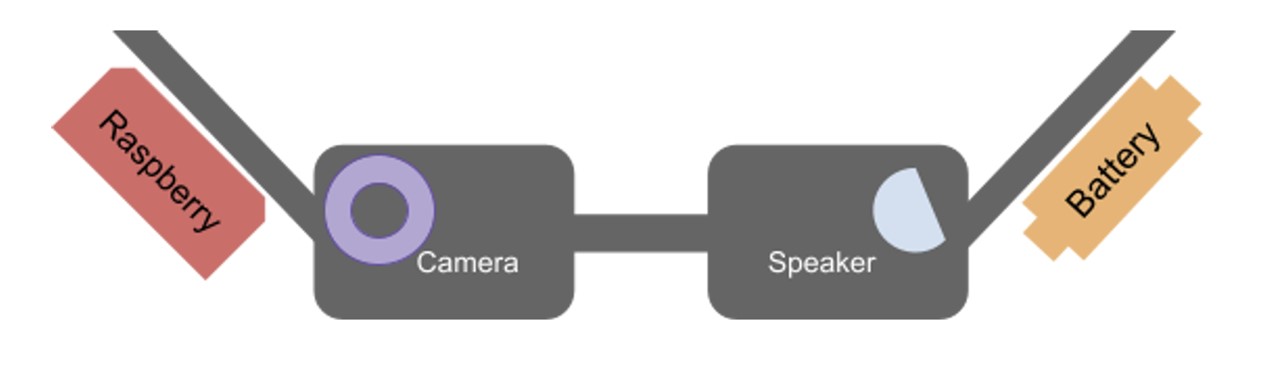

- Equipped with a Raspberry Pi camera module mounted on the glasses frame.

- Streams live video data of the user’s surroundings.

- AI-Powered Object Detection:

- Utilizes AI models hosted on the onboard microprocessor to analyze the camera feed.

- Identifies objects and obstacles in real-time.

- Estimates distances to detected objects for spatial awareness.

- Auditory Feedback:

- Converts visual data into verbal descriptions using text-to-speech (TTS) technology.

- Provides information about the environment, such as:

- “Chair 2 meters ahead.”

- “Obstacle to your left at 1.5 meters.”

- Microprocessor Integration:

- Processes data using a Raspberry Pi or similar microprocessor.

- Handles object recognition, distance estimation, and audio output seamlessly.

- Energy Efficiency:

- Designed for prolonged use with optimized battery consumption.

- Portable and lightweight for user convenience.
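The feature list does not say how distances will be estimated. One common single-camera approach, shown here purely as an assumption, is the pinhole camera model: an object of known real height appears smaller in the image in proportion to its distance. In the sketch below, the `KNOWN_HEIGHTS_M` table, its values, and both function names are hypothetical and only illustrate the arithmetic.

```python
from typing import Optional

# Assumed typical object heights in meters (illustrative values only).
KNOWN_HEIGHTS_M = {"chair": 0.9, "door": 2.0, "person": 1.7}


def focal_length_px(known_distance_m: float, real_height_m: float,
                    pixel_height: float) -> float:
    """Calibrate the focal length in pixels from one reference measurement.

    Place an object of known height at a known distance, measure its height
    in the image, and solve the pinhole relation for the focal length.
    """
    return pixel_height * known_distance_m / real_height_m


def estimate_distance_m(label: str, box_height_px: float,
                        focal_px: float) -> Optional[float]:
    """Estimate distance from the bounding-box height of a detected object.

    Returns None when the object class has no assumed height or the box
    height is not positive, so the caller can skip the distance.
    """
    real_height = KNOWN_HEIGHTS_M.get(label)
    if real_height is None or box_height_px <= 0:
        return None
    # Pinhole model: distance = real_height * focal_length / pixel_height
    return real_height * focal_px / box_height_px


if __name__ == "__main__":
    # Calibration: a 0.9 m chair placed 2 m away appears 270 px tall.
    focal = focal_length_px(2.0, 0.9, 270)
    # The same chair appearing 135 px tall is roughly twice as far away.
    print(estimate_distance_m("chair", 135, focal))
```

This method depends on the assumed object heights being roughly right, so a dedicated depth sensor or a learned depth model may turn out to be a better fit for the project.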